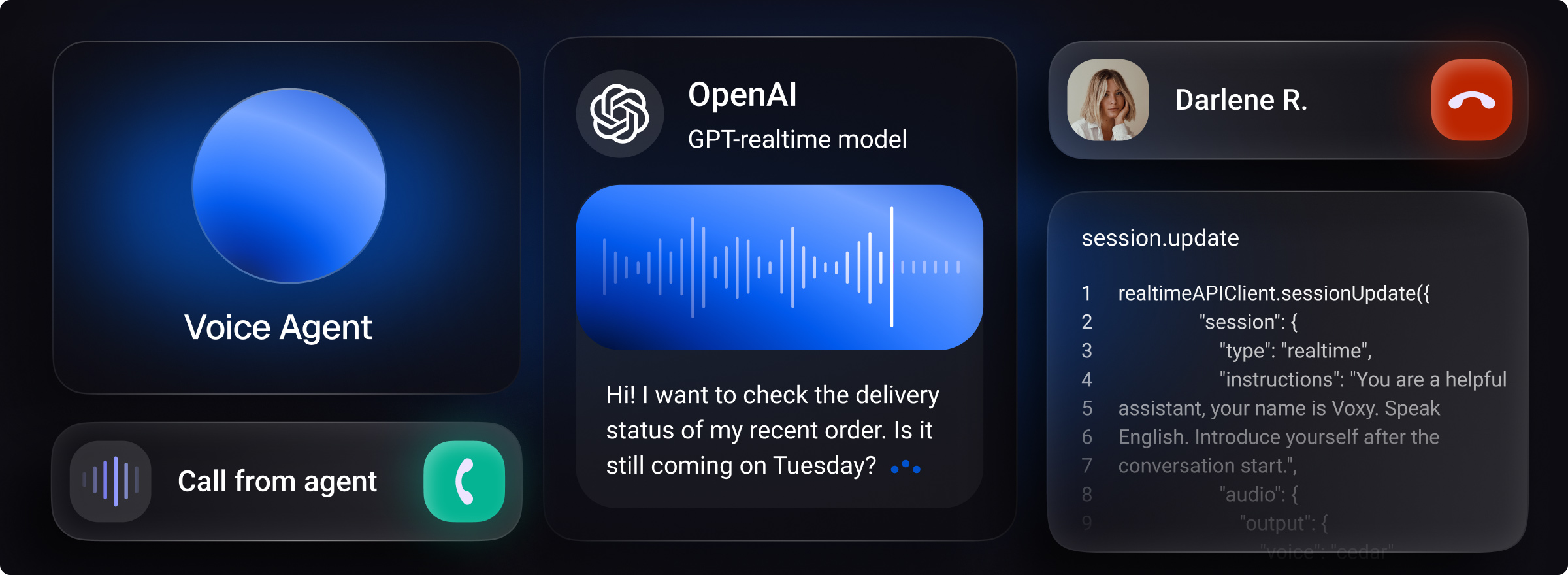

We have updated our OpenAI Realtime API Client to align with the recently released general availability (GA) version of the OpenAI Realtime API. The Beta namespace is still available, but we recommend switching from OpenAI.Beta.RealtimeAPIClient to the new OpenAI.RealtimeAPIClient class to make full use of the updated model features.

OpenAI’s Realtime API provides speech-to-speech Voice AI interaction. Voximplant’s OpenAI Client connects OpenAI’s Realtime API to phone numbers, SIP-based systems, WhatsApp, WebRTC clients, and native mobile devices. Voximplant’s serverless architecture handles media conversion. Session parameters, message objects, and events remain aligned with OpenAI’s Realtime WebSocket API.

A demo is available by calling +1 909 318-9013 or +1 888 852-0965 on WhatsApp.

Highlights

- Voximplant supports the new gpt-realtime and gpt-realtime-mini models in both the GA and Beta namespaces

- Beta namespace remains available and continues to mirror OpenAI’s Realtime Preview shape while it is supported by OpenAI

- OpenAI’s new Cedar and Marin voices are supported in both the GA and Beta interfaces

- The OpenAI.RealtimeAPIClient supports the new transcription-only mode

- New gpt-realtime features are fully supported: asynchronous function calling, MCP tool calls, reusable prompts, and improved tracing

- Voximplant does work with OpenAI’s new SIP interface, but we strongly recommend using the OpenAI.RealtimeAPIClient to reduce server infrastructure and simplify model interaction
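
Because message objects stay aligned with OpenAI’s Realtime WebSocket API, function calling can be sketched in terms of plain payloads. The following is an illustration under stated assumptions, not a Voximplant API: the `get_order_status` tool, the `handlers` map, and the `buildFunctionCallOutput` helper are all hypothetical, and the sketch does not show which client method sends the resulting item back to the model.

```javascript
// Hypothetical tool definition in the OpenAI Realtime function-tool shape.
// A list like this would be passed as the session's `tools` in a session update.
const tools = [{
  type: 'function',
  name: 'get_order_status',
  description: 'Look up the status of an order by its ID.',
  parameters: {
    type: 'object',
    properties: { orderId: { type: 'string' } },
    required: ['orderId'],
  },
}];

// Hypothetical local implementations, keyed by tool name.
const handlers = {
  get_order_status: ({ orderId }) => ({ orderId, status: 'shipped' }),
};

// Turns a completed function call (call_id, name, JSON-string arguments)
// into a `function_call_output` conversation item.
function buildFunctionCallOutput(call, handlerMap) {
  const handler = Object.hasOwn(handlerMap, call.name) ? handlerMap[call.name] : undefined;
  let result;
  if (!handler) {
    result = { error: `Unknown tool: ${call.name}` };
  } else {
    try {
      result = handler(JSON.parse(call.arguments));
    } catch (err) {
      // Malformed arguments or a failing handler are reported back as an error
      result = { error: String(err.message) };
    }
  }
  return { type: 'function_call_output', call_id: call.call_id, output: JSON.stringify(result) };
}

const item = buildFunctionCallOutput(
  { call_id: 'call_123', name: 'get_order_status', arguments: '{"orderId":"A1"}' },
  handlers
);
console.log(item.output); // {"orderId":"A1","status":"shipped"}
```

Keeping the handler lookup separate from the transport makes the tool logic testable outside a call scenario.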

Developer notes

- The Beta namespace remains available and continues to mirror OpenAI’s Realtime Preview shape — if your code uses OpenAI.Beta.*, nothing breaks

- The new session update shape, which aligns with the GA API, is covered in our migration guide:

  - Session `type` is required by OpenAI. Voximplant will default this to `realtime` and log a warning if it is not provided

  - Use the nested `session` object

  - Specify the output voice via `session.audio.output.voice`

  - Specify the audio transcription via the `session.audio.input.transcription` object, which specifies the transcription `model`: currently `whisper-1`, `gpt-4o-mini-transcribe`, or `gpt-4o-transcribe`

- We have added a new Transcription mode example to our OpenAI Guide

- See our API reference documentation for the complete interface definition and list of events
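
The session shape described in the notes above can be sketched as a small payload builder. This is an illustrative sketch, not part of the Voximplant SDK: `buildSessionUpdate` and its option names are hypothetical, and the defaulting of `type` only imitates the behavior described above.

```javascript
// Transcription models listed in the developer notes above.
const TRANSCRIPTION_MODELS = ['whisper-1', 'gpt-4o-mini-transcribe', 'gpt-4o-transcribe'];

// Hypothetical helper: builds a GA-shaped session update payload
// from a few flat options.
function buildSessionUpdate({ type, voice, transcriptionModel, instructions } = {}) {
  if (transcriptionModel !== undefined && !TRANSCRIPTION_MODELS.includes(transcriptionModel)) {
    throw new Error(`Unsupported transcription model: ${transcriptionModel}`);
  }
  if (type === undefined) {
    // Imitates the documented behavior: default to "realtime" and warn
    console.warn('session.type not provided, defaulting to "realtime"');
  }
  const session = { type: type ?? 'realtime' };
  if (instructions !== undefined) session.instructions = instructions;

  const audio = {};
  if (voice !== undefined) audio.output = { voice };
  if (transcriptionModel !== undefined) {
    audio.input = { transcription: { model: transcriptionModel } };
  }
  if (Object.keys(audio).length > 0) session.audio = audio;

  // Everything sits under the nested `session` object
  return { session };
}

const payload = buildSessionUpdate({
  type: 'realtime',
  voice: 'marin',
  transcriptionModel: 'gpt-4o-mini-transcribe',
});
console.log(JSON.stringify(payload, null, 2));
```

The returned object has the same nesting as the argument passed to `sessionUpdate` in the basic example.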

Basic example

    require(Modules.OpenAI);

    VoxEngine.addEventListener(AppEvents.CallAlerting, async ({ call }) => {
      let realtimeAPIClient = undefined;
      let greetingPlayed = false;

      call.answer();

      const callBaseHandler = () => {
        if (realtimeAPIClient) realtimeAPIClient.close();
        VoxEngine.terminate();
      };
      call.addEventListener(CallEvents.Disconnected, callBaseHandler);
      call.addEventListener(CallEvents.Failed, callBaseHandler);

      const OPENAI_API_KEY = 'PUT_YOUR_OPENAI_API_KEY_HERE';
      const MODEL = "gpt-realtime";

      const onWebSocketClose = (event) => {
        // Connection to OpenAI has been closed
        VoxEngine.terminate();
      };

      const realtimeAPIClientParameters = {
        model: MODEL,
        apiKey: OPENAI_API_KEY,
        type: OpenAI.RealtimeAPIClientType.REALTIME,
        onWebSocketClose
      };

      try {
        // Create realtime client instance
        realtimeAPIClient = await OpenAI.createRealtimeAPIClient(realtimeAPIClientParameters);

        // Start sending media to the call from an OpenAI agent
        realtimeAPIClient.sendMediaTo(call);

        realtimeAPIClient.addEventListener(OpenAI.RealtimeAPIEvents.Error, (event) => {
          Logger.write('===OpenAI.RealtimeAPIEvents.Error===');
          Logger.write(JSON.stringify(event));
        });

        realtimeAPIClient.addEventListener(OpenAI.RealtimeAPIEvents.SessionCreated, (event) => {
          Logger.write('===OpenAI.RealtimeAPIEvents.SessionCreated===');
          Logger.write(JSON.stringify(event));

          // Realtime session update - change voice and enable input audio transcription
          realtimeAPIClient.sessionUpdate({
            "session": {
              "type": "realtime",
              "instructions": "You are a helpful assistant, your name is Voxy. Speak English. Introduce yourself after the conversation start.",
              "audio": {
                "output": {
                  "voice": "cedar"
                },
                "input": {
                  "transcription": {
                    "model": "whisper-1",
                    "language": "en"
                  }
                }
              },
              "turn_detection": {
                "type": "server_vad",
                "create_response": true,
                "interrupt_response": true,
                "prefix_padding_ms": 300,
                "silence_duration_ms": 200,
                "threshold": 0.5
              }
            }
          });

          // Trigger the agent to start the conversation
          const response = {};
          realtimeAPIClient.responseCreate(response);
        });

        // Interruptions support: clear the media buffer in case of OpenAI's VAD detected speech input
        realtimeAPIClient.addEventListener(OpenAI.RealtimeAPIEvents.InputAudioBufferSpeechStarted, (event) => {
          if (realtimeAPIClient) realtimeAPIClient.clearMediaBuffer();
        });

        // Start sending media to the OpenAI agent from the call after the prompt playback
        realtimeAPIClient.addEventListener(OpenAI.Events.WebSocketMediaEnded, (event) => {
          if (!greetingPlayed) {
            greetingPlayed = true;
            VoxEngine.sendMediaBetween(call, realtimeAPIClient);
          }
        });
      } catch (error) {
        // Something went wrong
        Logger.write(error);
        VoxEngine.terminate();
      }
    });
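
The greeting gate at the end of the example (bridge caller audio only after the first playback finishes) can be isolated as a one-shot pattern. The sketch below runs outside VoxEngine and makes several assumptions: `MockClient` and the `'MediaEnded'` event name are hypothetical stand-ins for the realtime client and `OpenAI.Events.WebSocketMediaEnded`, and the `bridge` callback stands in for `VoxEngine.sendMediaBetween`.

```javascript
// Hypothetical stand-in for the realtime client; implements only what the sketch needs.
class MockClient {
  constructor() {
    this.listeners = new Map();
  }
  addEventListener(name, handler) {
    const registered = this.listeners.get(name) ?? [];
    registered.push(handler);
    this.listeners.set(name, registered);
  }
  emit(name, event = {}) {
    for (const handler of this.listeners.get(name) ?? []) handler(event);
  }
}

// Calls bridge() on the first media-ended event only; later events are ignored.
function bridgeAfterGreeting(client, bridge) {
  let greetingPlayed = false;
  client.addEventListener('MediaEnded', () => {
    if (!greetingPlayed) {
      greetingPlayed = true;
      bridge();
    }
  });
}

const client = new MockClient();
let bridgeCalls = 0;
bridgeAfterGreeting(client, () => { bridgeCalls += 1; });

client.emit('MediaEnded'); // greeting finished: caller audio gets bridged
client.emit('MediaEnded'); // later playback ends: no second bridge
console.log(bridgeCalls); // 1
```

Without the flag, every subsequent playback end would try to bridge the call again, so the gate keeps the setup step idempotent.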

Links

- Usage examples for Voximplant’s new OpenAI Realtime API GA client: https://voximplant.com/docs/voice-ai/openai/realtime-client

- Step-by-step Beta to GA migration guide for Voximplant’s new Realtime GA client: https://voximplant.com/docs/voice-ai/openai/migration-guide

- OpenAI Realtime API Client product page: https://voximplant.com/products/openai-client

- Voximplant getting started guide for the OpenAI client: https://voximplant.com/docs/voice-ai/openai

- Voximplant API reference: https://voximplant.com/docs/references/voxengine/openai

- OpenAI gpt-realtime GA announcement: https://openai.com/index/introducing-gpt-realtime/

- OpenAI Realtime API overview: https://platform.openai.com/docs/guides/realtime