Dialogflow connector

Use this block to connect a Dialogflow agent to a call in your scenario.

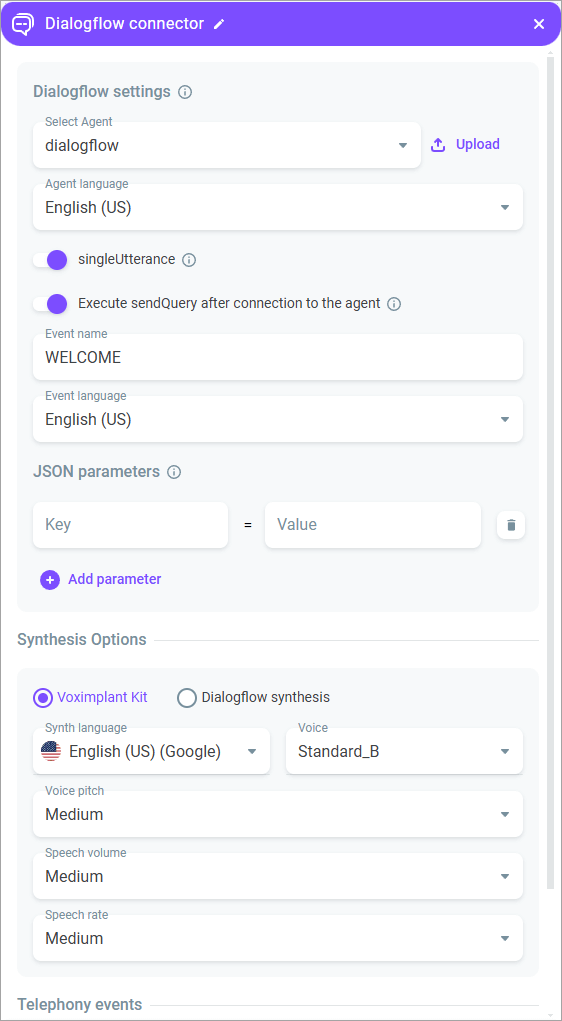

To configure the Dialogflow agent, do the following:

- Connect the block using the Out and Transfer call ports.

- Double-click the Dialogflow Connector block.

- Select an agent from the Select agent drop-down list.

- Select the language from the Agent language drop-down list.

Create and configure the Dialogflow agent in your Google account, save it as JSON, and then upload it to your scenario.

- Enable the singleUtterance switch if you want the system to detect when the speaker has finished a single utterance and to end recognition automatically, returning the final ASR result. This setting is typically used for short customer replies, such as yes/no answers or service quality ratings.

- Enable the Execute sendQuery after connection to the agent switch to start the Dialogflow agent immediately when the scenario reaches the Dialogflow Connector block.

- Enter the name of the event in the Event name field.

- Select the language in the Event language field.

- In the JSON parameters section, configure keys and values to send the required information from your scenario to the agent.

- In the Synthesis options section, select how you want to synthesize speech for the agent: via Voximplant Kit or using Dialogflow synthesis.

- If you select Voximplant Kit, configure the synthesis language and voice. Depending on the TTS provider, you can also configure advanced settings:

Voice pitch - Configure the synthesized voice pitch (Google). Available options: x-low, low, medium, high, x-high, default.

Speech volume - Set the speech volume (Google). Available options: silent, x-soft, soft, medium, loud, x-loud, default.

Speech rate - Set the synthesized speech speed (Google, Yandex). Available options: x-slow, slow, medium, fast, x-fast, default.

Emotions - Configure the synthesized voice sentiment (applicable for specific Yandex voices). Available options: neutral, good, evil.

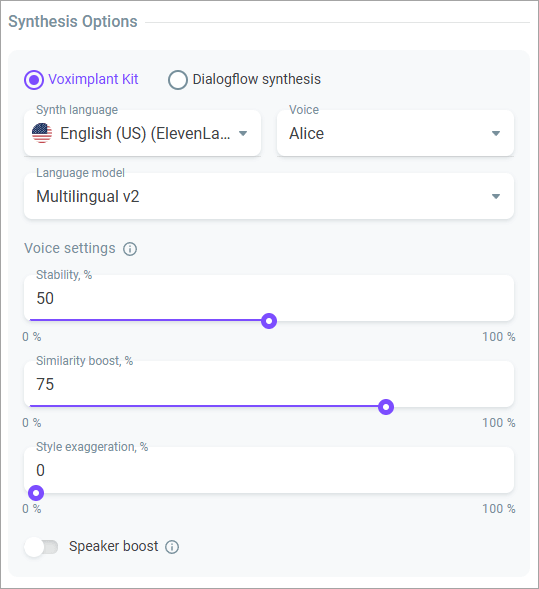
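The pitch, volume, and rate option names listed above match the values that the SSML `prosody` element accepts. As a minimal sketch, assuming you want to check a combination of these options before entering it in the block, the helper below validates the values and wraps text in an SSML `prosody` tag. The function name `build_prosody` is hypothetical, and whether Voximplant Kit passes these settings to the provider as SSML is an assumption, not something this page states.

```python
from xml.sax.saxutils import escape

# Allowed values, copied from the option lists in the block settings.
PITCH = {"x-low", "low", "medium", "high", "x-high", "default"}
VOLUME = {"silent", "x-soft", "soft", "medium", "loud", "x-loud", "default"}
RATE = {"x-slow", "slow", "medium", "fast", "x-fast", "default"}


def build_prosody(text, pitch="default", volume="default", rate="default"):
    """Hypothetical helper: validate the options and return an SSML string."""
    checks = (("pitch", pitch, PITCH), ("volume", volume, VOLUME), ("rate", rate, RATE))
    for name, value, allowed in checks:
        if value not in allowed:
            raise ValueError(f"unsupported {name}: {value!r}")
    # Escape the text so characters like '&' do not break the markup.
    return (
        f'<speak><prosody pitch="{pitch}" volume="{volume}" rate="{rate}">'
        f"{escape(text)}</prosody></speak>"
    )
```

For example, `build_prosody("Thank you", pitch="low", rate="slow")` returns markup with a low pitch and slow rate, while an unlisted value such as `pitch="loud"` raises `ValueError`.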

If you select ElevenLabs as the TTS provider, additional speech synthesis settings are available:

Language model - The model understands which language you use and generates audio accordingly.

Stability - Allows you to adjust how emotional the voice sounds. The higher the stability, the more restrained and calm the voice becomes. Lowering the setting introduces a broader emotional range.

Similarity boost - Allows you to adjust the level of clarity and similarity of the voice. If similarity is set too high, the AI may reproduce artifacts from low-quality audio.

Style exaggeration - Allows you to amplify the style of the original speaker.

Speaker boost - When enabled, allows you to make TTS speech sound more human-like. Enabling the setting may slightly increase the speech synthesis time.

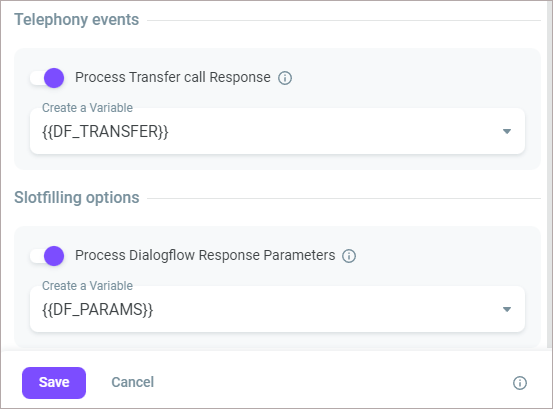
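To see how these settings relate to one another, here is a rough sketch that models them as a small validated record. The field names follow the `voice_settings` object of the public ElevenLabs API as commonly documented. The 0 to 1 range check and the default values are illustrative assumptions, and how Voximplant Kit forwards the settings to ElevenLabs is not described on this page.

```python
from dataclasses import dataclass, asdict


@dataclass
class VoiceSettings:
    """Hypothetical container for the ElevenLabs settings described above."""

    stability: float = 0.5          # higher = calmer, more restrained voice
    similarity_boost: float = 0.75  # higher = closer to the original voice
    style: float = 0.0              # style exaggeration
    use_speaker_boost: bool = True  # may slightly increase synthesis time

    def __post_init__(self):
        # Assumed range: each slider value lies between 0 and 1 inclusive.
        for name in ("stability", "similarity_boost", "style"):
            value = getattr(self, name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{name} must be between 0 and 1, got {value}")


# A calmer voice with the other settings left at the illustrative defaults.
settings = asdict(VoiceSettings(stability=0.8))
```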

- Enable the Process transfer call response switch if you need to forward a call. Enter or select a variable in the Create a variable field.

- Enable the Process Dialogflow response parameters switch to get all data from the agent and use it later in the scenario. Enter or select a variable in the Create a variable field.

- Select Save.
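For orientation, the Event name, Event language, and JSON parameters fields correspond closely to the event input of a Dialogflow ES `detectIntent` request. The sketch below builds such a request body as a plain dictionary. The helper name `build_event_query` and the sample values are hypothetical, and Voximplant Kit assembles the actual request internally, so treat this as an illustration of the data shape rather than something you need to write yourself.

```python
import json


def build_event_query(event_name, language_code, parameters=None):
    """Hypothetical helper: shape the block's event fields as a Dialogflow ES query."""
    if not event_name:
        raise ValueError("event_name must not be empty")
    return {
        "queryInput": {
            "event": {
                "name": event_name,                    # Event name field
                "languageCode": language_code,         # Event language field
                "parameters": dict(parameters or {}),  # JSON parameters section
            }
        }
    }


# Sample values are made up for illustration.
body = build_event_query("WELCOME", "en-US", {"customer_name": "Alex"})
print(json.dumps(body, indent=2))
```

The keys you add in the JSON parameters section become available to the agent as event parameters, which is why they appear under `parameters` in this sketch.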