Summary

A Voice AI Orchestration Platform (VAIOP) is a critical control layer for implementing Voice AI pipelines and connecting to communications systems. These platforms coordinate the selection of large language models (LLMs) and speech engines, manage conversational turn-taking, route audio media, invoke external tools, and enforce compliance, enabling organizations to deploy robust and scalable voice agents in production environments. This post explores the emergence of VAIOPs and their core architectural components, and compares them with other build approaches such as CPaaS and agent builders. It also discusses the key benefits for engineering and operations teams, addresses major LLM voice challenges, provides a buyer's checklist, and outlines Voximplant's approach to building effective voice AI solutions.

Table of contents

1. Definition

2. Why VAIOPs emerged

3. Core architecture

4. Build approaches: CPaaS vs VAIOP vs agent builders

5. Benefits for engineering and ops

6. Buyer checklist

7. Voximplant’s approach

8. FAQ

Definition

A Voice AI Orchestration Platform coordinates all components of a real‑time voice agent:

- Large Language Model (LLM) selection and prompting

- Speech‑to‑Text (STT) and Text‑to‑Speech (TTS) selection and switching

- Turn‑taking logic and media routing

- Tool and data access, plus logging, testing, and observability

- Phone system connectivity and telephony control (PSTN, SIP, WebRTC)

It abstracts vendor differences while keeping options open, so teams can mix and match engines within a call and evolve as models and prices change.
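The mix-and-match idea can be sketched as a small registry that resolves engines per turn, so call logic never names a vendor directly. This is a minimal illustration under stated assumptions: `EngineRegistry`, `EngineChoice`, and the vendor IDs are hypothetical placeholders, not a Voximplant or vendor API, and a real orchestrator would also hold client objects, credentials, and tuning options per engine.

```typescript
// Hypothetical engine set for one conversational turn; vendor IDs are placeholders.
interface EngineChoice {
  stt: string; // speech-to-text vendor
  tts: string; // text-to-speech vendor
  llm: string; // language model vendor
}

// Resolves which engines to use, so call logic never hard-codes a vendor.
class EngineRegistry {
  private readonly byLanguage = new Map<string, EngineChoice>();
  private readonly fallback: EngineChoice;

  constructor(fallback: EngineChoice) {
    this.fallback = fallback;
  }

  // Register a preferred engine set for a locale ("es-MX") or base language ("es").
  register(language: string, choice: EngineChoice): void {
    this.byLanguage.set(language, choice);
  }

  // Lookup order: exact locale, then base language, then the default set.
  resolve(language: string): EngineChoice {
    const exact = this.byLanguage.get(language);
    if (exact) return exact;
    const base = language.split("-")[0];
    return this.byLanguage.get(base) ?? this.fallback;
  }
}

const registry = new EngineRegistry({ stt: "stt-a", tts: "tts-a", llm: "llm-x" });
registry.register("es", { stt: "stt-b", tts: "tts-c", llm: "llm-x" });

// A caller switching languages mid-call simply resolves a different set on the next turn.
console.log(registry.resolve("en-US").tts); // tts-a
console.log(registry.resolve("es-MX").tts); // tts-c
```

Because every turn goes through `resolve`, swapping a vendor becomes a configuration change rather than a code change in the call flow.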

Why VAIOPs emerged

Telephony automation has evolved from DTMF and IVR in the 1970s through Natural Language Understanding (NLU) systems in the 2010s to today’s transformer‑based Large Language Models (LLMs). LLMs interpret broader inputs, require less manual labeling, and can ground answers via retrieval, but they introduce new operational risks and technical challenges—especially in live voice conversations. The gap between raw AI capability and production needs has created the need for dedicated orchestration. This is particularly true for telephony integration with its diverse ecosystem and demanding latency requirements.

NLUs match utterances to predefined intents. LLMs reason over open‑ended input, optionally using tools to interact with external APIs and Retrieval-Augmented Generation (RAG) to bring in outside data. The flexibility of LLMs speeds development and provides a more natural experience, but it raises questions about hallucinations, determinism, and context window management in real time. A VAIOP helps address these voice AI challenges without forcing a single vendor stack.
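Context window management in a live call often comes down to keeping the system prompt and dropping the oldest turns once a budget is exceeded. The sketch below is a hypothetical illustration: `trimHistory`, the `Turn` type, and the rough four-characters-per-token estimate are assumptions standing in for a real tokenizer and whatever summarization strategy a production agent would use.

```typescript
type Role = "system" | "user" | "assistant";

interface Turn {
  role: Role;
  text: string;
}

// Rough token estimate (assumption): about 4 characters per token.
// A production system would use the model vendor's tokenizer instead.
function estimateTokens(text: string): number {
  return Math.ceil(text.length / 4);
}

// Keep every system turn, then add the newest conversational turns
// until the next one would exceed the token budget.
function trimHistory(turns: Turn[], budget: number): Turn[] {
  const system = turns.filter((t) => t.role === "system");
  let used = system.reduce((sum, t) => sum + estimateTokens(t.text), 0);

  const kept: Turn[] = [];
  for (let i = turns.length - 1; i >= 0; i--) { // newest first
    const turn = turns[i];
    if (turn.role === "system") continue;
    const cost = estimateTokens(turn.text);
    if (used + cost > budget) break; // stop so the kept window stays contiguous
    used += cost;
    kept.unshift(turn);
  }
  return [...system, ...kept];
}

const conversation: Turn[] = [
  { role: "system", text: "You are a polite booking agent." },
  { role: "user", text: "I need a table for four tomorrow." },
  { role: "assistant", text: "Sure, what time works for you?" },
  { role: "user", text: "Around 7 pm, please." },
];

// With a 20-token budget, only the system prompt and the latest turn fit.
console.log(trimHistory(conversation, 20).map((t) => t.role)); // [ 'system', 'user' ]
```

Stopping at the first turn that does not fit (rather than skipping it and continuing) keeps the retained history contiguous, so the model never sees a reply without the question that prompted it.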

Core architecture (Voice AI + telephony stack)

Most production voice agents incorporate several distinct elements:

- Large Language Model (LLM): Interprets intent, plans steps, and generates responses.

- Speech-to-Text (STT): Converts caller audio to text for LLMs or analytics.

- Text-to-Speech (TTS): Synthesizes the agent’s response into natural speech.

- Turn‑taking: Voice activity detection and barge‑in policies to stay conversational.

- Telephony gateway: Bridges PSTN/SIP/WebRTC and manages signaling and media.

- Orchestration: Selects models and speech engines, routes audio/text streams, injects prompts, invokes tools, and enforces policies.
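Turn-taking is easier to reason about in code. Below is a minimal sketch of an energy-threshold voice activity detector that ends a turn after a run of silent frames (a "hangover") and reports barge-in when the caller speaks while the agent is talking. The `TurnDetector` class, the 0.02 threshold, and the 20 ms / 500 ms timings are assumptions for illustration; production systems typically rely on trained VAD models and smarter end-of-turn detection than raw energy.

```typescript
// 16-bit PCM samples for one 20 ms frame (160 samples at 8 kHz, an assumption).
type Frame = Int16Array;
type TurnEvent = "speech_start" | "barge_in" | "end_of_turn" | null;

// Root-mean-square energy of a frame, normalized to 0..1.
function rms(frame: Frame): number {
  if (frame.length === 0) return 0;
  let sum = 0;
  for (let i = 0; i < frame.length; i++) {
    const s = frame[i] / 32768;
    sum += s * s;
  }
  return Math.sqrt(sum / frame.length);
}

class TurnDetector {
  private inSpeech = false;
  private silentFrames = 0;
  private readonly threshold: number;
  private readonly hangoverFrames: number;

  // threshold: energy above this counts as speech.
  // hangoverFrames: consecutive silent frames that end a turn (25 x 20 ms = 500 ms).
  constructor(threshold = 0.02, hangoverFrames = 25) {
    this.threshold = threshold;
    this.hangoverFrames = hangoverFrames;
  }

  // Feed one caller frame; agentSpeaking is true while TTS audio is playing.
  push(frame: Frame, agentSpeaking: boolean): TurnEvent {
    if (rms(frame) > this.threshold) {
      this.silentFrames = 0;
      if (this.inSpeech) return null;
      this.inSpeech = true;
      // Caller talked over the agent: the orchestrator should stop TTS playback.
      return agentSpeaking ? "barge_in" : "speech_start";
    }
    if (this.inSpeech && ++this.silentFrames >= this.hangoverFrames) {
      this.inSpeech = false;
      this.silentFrames = 0;
      return "end_of_turn"; // time to send the transcript to the LLM
    }
    return null;
  }
}

const loud = new Int16Array(160).fill(8000); // RMS about 0.24
const quiet = new Int16Array(160); // digital silence
const detector = new TurnDetector();

console.log(detector.push(loud, true)); // barge_in
let lastEvent: TurnEvent = null;
for (let i = 0; i < 25; i++) lastEvent = detector.push(quiet, false);
console.log(lastEvent); // end_of_turn
```

The hangover length is the main tuning trade-off: shorter values make the agent respond faster but risk cutting callers off mid-pause.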

Modern platforms also provide agent management, prompt and data tooling (including RAG), tool invocation governance, compliance features, testing harnesses, and production observability, often aligning with interfaces like the Model Context Protocol (MCP).
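Tool invocation governance can start as an allowlist with per-tool argument checks that run before the orchestrator executes anything the LLM requests. The sketch below is hypothetical: the tool names, the `ToolPolicy` shape, and the limits are illustrative and are not taken from MCP or from any vendor's tool-calling API.

```typescript
// Shape of a tool call requested by the LLM (assumed for illustration).
interface ToolCall {
  name: string;
  args: Record<string, unknown>;
}

interface ToolPolicy {
  validate: (args: Record<string, unknown>) => string | null; // error message or null
  requiresConfirmation: boolean; // ask the caller before side effects
}

type Decision =
  | { kind: "allow" }
  | { kind: "confirm" }
  | { kind: "deny"; reason: string };

// Allowlist: tools not listed here are never executed.
const policies = new Map<string, ToolPolicy>([
  [
    "lookup_order",
    {
      validate: (a: Record<string, unknown>) => {
        const id = a["orderId"];
        return typeof id === "string" && /^\d{6}$/.test(id) ? null : "orderId must be 6 digits";
      },
      requiresConfirmation: false,
    },
  ],
  [
    "issue_refund",
    {
      validate: (a: Record<string, unknown>) => {
        const amount = a["amount"];
        return typeof amount === "number" && amount > 0 && amount <= 100
          ? null
          : "amount must be greater than 0 and at most 100";
      },
      requiresConfirmation: true,
    },
  ],
]);

// Gate every LLM-requested tool call before the orchestrator executes it.
function govern(call: ToolCall): Decision {
  const policy = policies.get(call.name);
  if (!policy) return { kind: "deny", reason: `tool ${call.name} is not allowlisted` };
  const error = policy.validate(call.args);
  if (error !== null) return { kind: "deny", reason: error };
  return policy.requiresConfirmation ? { kind: "confirm" } : { kind: "allow" };
}

console.log(govern({ name: "lookup_order", args: { orderId: "123456" } }).kind); // allow
console.log(govern({ name: "issue_refund", args: { amount: 20 } }).kind); // confirm
console.log(govern({ name: "issue_refund", args: { amount: 500 } }).kind); // deny
console.log(govern({ name: "delete_account", args: {} }).kind); // deny
```

Returning `confirm` rather than executing lets the voice flow read the action back to the caller before any side effect happens.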

Voice AI for Telephony Stack


Build approaches: CPaaS vs VAIOP vs agent builders

There are several ways to assemble agents:

- Communications Platform-as-a-Service (CPaaS): Maximum flexibility, but integration complexity increases as you stitch telephony, STT/TTS, LLMs, turn‑taking, and tools.

- Voice AI Orchestration Platform: Purpose‑built to orchestrate LLMs, speech, turn‑taking, prompts, data, tools, and telephony. Often provides APIs plus low‑code options.

- Agent builders: Simplify build time but usually lock you into a single stack with limited extensibility.

- Vertical agents: Ready‑to‑use for a niche (e.g., restaurants, collections) but typically without an API.

A VAIOP balances choice with speed. It lets you test engines, switch vendors, and fail over without re‑architecting.

Benefits for engineering and ops

- Telephony connectivity: Manage numbers, DID, SIP trunks, and browser calling in one place.

- Telephony control: Fine‑grained control over signaling, media, and reachability.

- API abstraction: One API for many LLM and speech vendors.

- Redundancy: Automatic fallback across speech or telephony providers.

- Vendor flexibility: Swap STT, TTS, and LLMs as needs or pricing change.

- Mix‑and‑match: Use different engines per language, persona, or even mid‑call.

- Unified analytics: End‑to‑end metrics independent of any vendor’s portal.
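Redundancy and vendor flexibility both rest on the same mechanism: try providers in priority order and stop sending traffic to one that keeps failing. The sketch below is a simplified, synchronous illustration with hypothetical names (`Failover`, `Provider`); real STT/TTS calls are asynchronous streams and would also need timeouts and cancellation.

```typescript
// A provider is any function that maps input to output or throws on failure.
interface Provider<I, O> {
  name: string;
  call: (input: I) => O;
}

// Ordered failover plus a simple circuit breaker: after `maxFailures`
// consecutive errors, a provider is skipped for `cooldownMs`.
class Failover<I, O> {
  private readonly failures = new Map<string, number>();
  private readonly openUntil = new Map<string, number>();
  private readonly providers: Provider<I, O>[];
  private readonly maxFailures: number;
  private readonly cooldownMs: number;
  private readonly now: () => number;

  constructor(
    providers: Provider<I, O>[],
    maxFailures = 3,
    cooldownMs = 30000,
    now: () => number = () => Date.now(),
  ) {
    this.providers = providers;
    this.maxFailures = maxFailures;
    this.cooldownMs = cooldownMs;
    this.now = now;
  }

  run(input: I): { provider: string; output: O } {
    const errors: string[] = [];
    for (const p of this.providers) {
      if ((this.openUntil.get(p.name) ?? 0) > this.now()) {
        errors.push(`${p.name}: circuit open`);
        continue;
      }
      try {
        const output = p.call(input);
        this.failures.set(p.name, 0); // a success resets the failure count
        return { provider: p.name, output };
      } catch (e) {
        const n = (this.failures.get(p.name) ?? 0) + 1;
        this.failures.set(p.name, n);
        if (n >= this.maxFailures) this.openUntil.set(p.name, this.now() + this.cooldownMs);
        errors.push(`${p.name}: ${String(e)}`);
      }
    }
    throw new Error(`all providers failed (${errors.join("; ")})`);
  }
}

let clock = 0; // injectable clock so the example is deterministic
const tts = new Failover<string, string>(
  [
    { name: "primary", call: () => { throw new Error("503 Service Unavailable"); } },
    { name: "backup", call: (text) => `audio(${text})` },
  ],
  2, // open the circuit after 2 consecutive failures
  10000, // then skip the provider for 10 s
  () => clock,
);

console.log(tts.run("Hello").provider); // backup (primary failed once)
console.log(tts.run("Hello").provider); // backup (second failure opens primary's circuit)
clock = 5000;
console.log(tts.run("Hello").provider); // backup (primary skipped without being called)
```

Injecting the clock (`now`) keeps the example deterministic and makes the breaker easy to test; production code would use the real time source.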

This approach assumes rapid AI evolution and avoids betting the roadmap on one provider.

Buyer checklist

Telephony & reachability

- Global phone numbers

- Direct Inward Dial (DID) and outbound call management

- Strong SIP support: SIP trunking, registrar support, TLS/SRTP options, and DTMF handling

- WebRTC for browser and mobile SDKs

- WhatsApp Business calling support (if needed)

- Recording, retention, and compliance features

Speech quality & latency

- Multiple STT/TTS vendors with advanced tuning (phrase hints, multi‑language, dictionaries)

- Wideband/HD audio where carriers and WebRTC allow

- Multi‑region routing to keep users, LLMs, and speech engines close

LLM flexibility

- Direct integrations with real‑time LLM agent APIs

- Lightweight wrappers that do not limit or hide provider‑specific capabilities

- Media over WebSockets for custom or third‑party real‑time models
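Media-over-WebSockets integrations typically stream audio as small, fixed-duration frames, so the frame arithmetic matters for both bandwidth and latency. For 16-bit mono PCM, bytes per frame = sample rate × frame duration × 2: a 20 ms frame is 8000 × 0.02 × 2 = 320 bytes at 8 kHz narrowband and 640 bytes at 16 kHz wideband. The sketch below assumes raw 16-bit PCM and 20 ms frames; the `MediaMessage` envelope is a hypothetical shape for illustration, not Voximplant's or any model vendor's wire format, since real protocols define their own message fields and encodings.

```typescript
// Bytes in one frame of 16-bit (2-byte) mono PCM.
function frameBytes(sampleRateHz: number, frameMs: number): number {
  return ((sampleRateHz * frameMs) / 1000) * 2;
}

// Split a PCM byte buffer into fixed-size frames; the last frame is zero-padded
// so the receiver always gets frames of uniform size.
function toFrames(pcm: Uint8Array, sampleRateHz: number, frameMs = 20): Uint8Array[] {
  const size = frameBytes(sampleRateHz, frameMs);
  if (size <= 0) throw new RangeError("frame size must be positive");
  const out: Uint8Array[] = [];
  for (let offset = 0; offset < pcm.length; offset += size) {
    const frame = new Uint8Array(size); // zero-filled by default
    frame.set(pcm.subarray(offset, offset + size));
    out.push(frame);
  }
  return out;
}

// Hypothetical per-frame metadata a custom real-time model endpoint might expect.
interface MediaMessage {
  event: "media";
  seq: number;
  sampleRateHz: number;
  payloadBytes: number;
}

function envelopes(frames: Uint8Array[], sampleRateHz: number): MediaMessage[] {
  return frames.map((f, seq): MediaMessage => ({
    event: "media",
    seq,
    sampleRateHz,
    payloadBytes: f.length,
  }));
}

console.log(frameBytes(8000, 20)); // 320 (narrowband)
console.log(frameBytes(16000, 20)); // 640 (wideband)

const oneSecondWideband = new Uint8Array(16000 * 2 + 100); // 1 s of audio plus 100 stray bytes
const chunked = toFrames(oneSecondWideband, 16000);
console.log(chunked.length); // 51
```

Wideband doubles the bytes per frame but not the number of frames, so per-message overhead stays the same while speech engines receive richer audio.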

Developer experience

- Runtime for call logic – ideally serverless to minimize telephony operations

- First‑class SDKs and CI/CD support

- Clear pricing and usage analytics

Voximplant’s Voice AI Orchestration Platform

Voximplant’s platform enables developers to build and scale Voice AI agents for real-time communications.

Developer‑first platform

Voximplant ships a serverless JavaScript environment for call control, storage, and key-value data. SDKs and client libraries cover iOS, Android, Web, React Native, Flutter, and Unity, plus server libraries for popular languages.

Expose the full power of real‑time LLMs

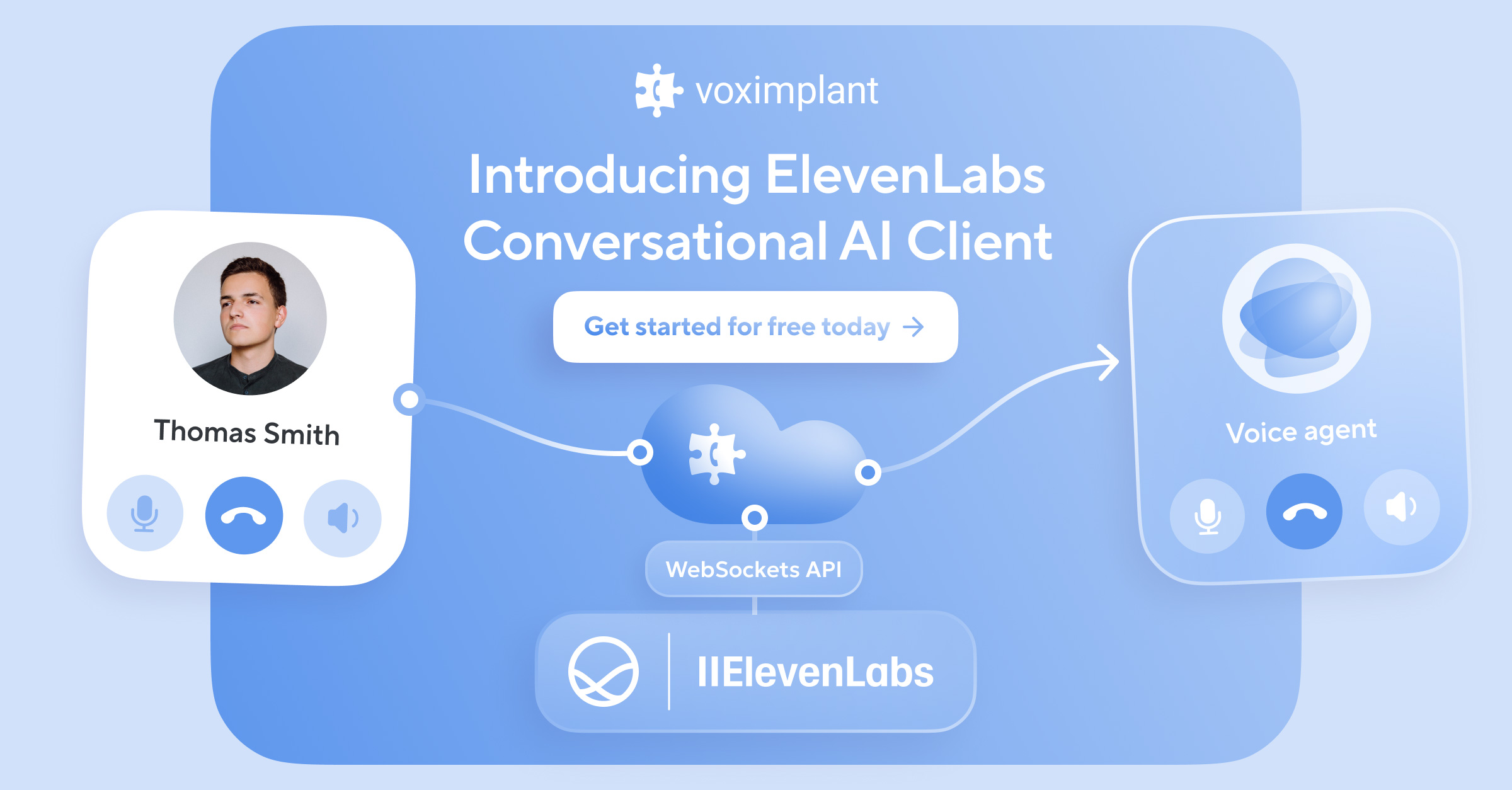

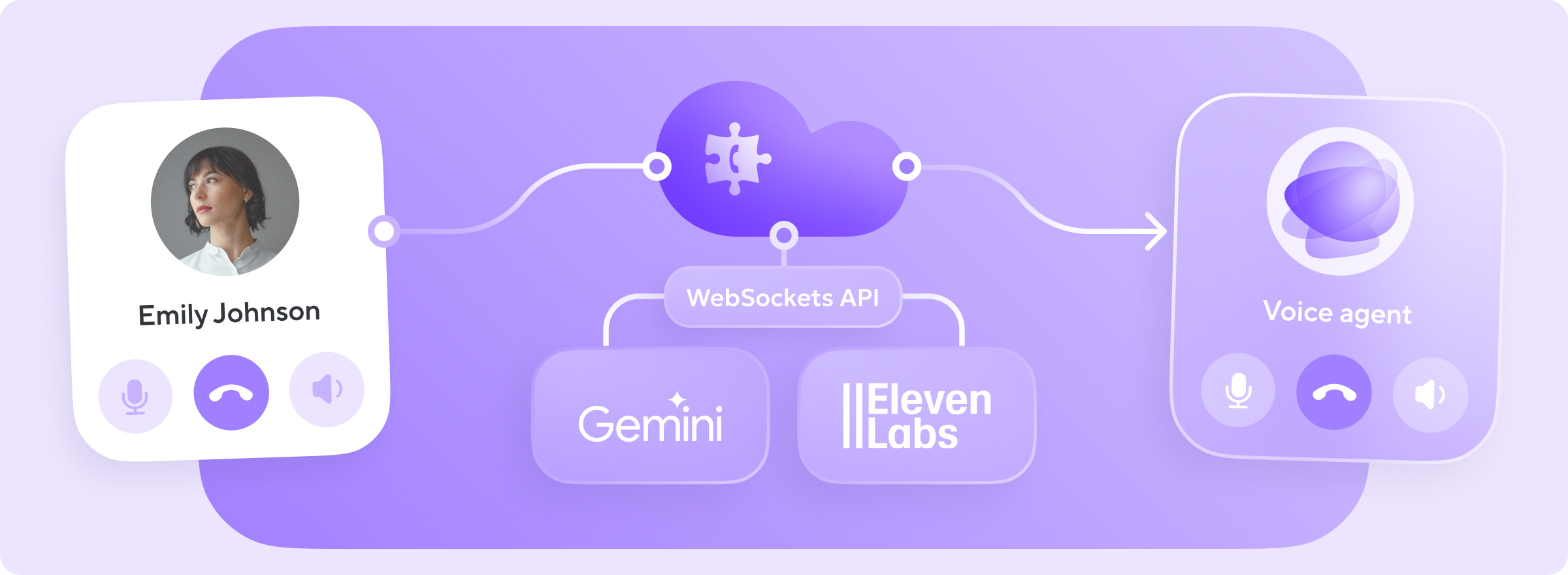

Direct agent integrations include Google Gemini Live, ElevenLabs Conversational AI, OpenAI Realtime API (beta), and Ultravox, plus Google Dialogflow ES and CX. A Media‑over‑WebSockets gateway enables connectivity to other real‑time AI systems.

Speech quality focus

Voximplant supports multiple STT providers and exposes advanced features like phrase hints, weighted dictionaries, and multi‑language input. The platform integrates nine TTS providers with hundreds of voices across most languages, including state‑of‑the‑art models. Wideband/HD audio is supported with carriers that enable it and natively in WebRTC.

Global low‑latency network

Voximplant operates a distributed network with 15 data centers across five continents.

Telephony integrations

- Phone numbers in 100+ countries, with support for toll‑free numbers and SMS/MMS messaging

- DID with queueing and outbound tools such as call lists and answering‑machine detection

- SIP trunking, registrar, and SIP calling with robust interoperability support

- Registration to third‑party PBXs so AI agents can act like human agents

- WebRTC for browser and app calling, including a click‑to‑call widget

- Support for WhatsApp Business calling

More information

See the new voximplant.ai website to learn more!

FAQ

What is a Voice AI Orchestration Platform in one sentence?

A VAIOP is the control plane that connects telephony to AI, selects and switches LLM/STT/TTS in real time, manages turn‑taking, and governs tools and data for safe, reliable conversations.

How is it different from a CPaaS?

A CPaaS gives raw building blocks for voice and messaging. A VAIOP layers AI‑specific orchestration on top—prompt and context management, engine selection, tool governance, testing, and analytics—so you spend less time on glue code.

Do I still need NLU if I’m using an LLM?

Many teams mix approaches. NLUs can be fast and deterministic for narrow tasks. LLMs handle open‑ended input and tools. A VAIOP lets you choose per flow and even per turn.
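Choosing per turn can be sketched as a router: try a fast, deterministic intent matcher first and hand off to the LLM only when no narrow intent matches confidently. The keyword matcher, the intent names, and the 0.5 threshold below are hypothetical stand-ins for a real NLU engine, which would return its own intent and confidence score.

```typescript
interface Intent {
  name: string;
  keywords: string[];
}

type Route =
  | { engine: "nlu"; intent: string; confidence: number }
  | { engine: "llm" };

// Toy NLU (hypothetical): confidence is the share of an intent's keywords found.
function matchIntent(utterance: string, intents: Intent[]): { intent: string; confidence: number } {
  const words = new Set<string>(utterance.toLowerCase().match(/[a-z0-9]+/g) ?? []);
  let best = { intent: "", confidence: 0 };
  for (const intent of intents) {
    const hits = intent.keywords.filter((k) => words.has(k)).length;
    const confidence = intent.keywords.length === 0 ? 0 : hits / intent.keywords.length;
    if (confidence > best.confidence) best = { intent: intent.name, confidence };
  }
  return best;
}

// Per-turn routing: a confident narrow intent takes the deterministic path,
// everything else goes to the LLM.
function routeTurn(utterance: string, intents: Intent[], threshold = 0.5): Route {
  const { intent, confidence } = matchIntent(utterance, intents);
  return confidence >= threshold ? { engine: "nlu", intent, confidence } : { engine: "llm" };
}

const intents: Intent[] = [
  { name: "check_balance", keywords: ["balance", "account"] },
  { name: "opening_hours", keywords: ["open", "hours"] },
];

console.log(routeTurn("What's my account balance?", intents).engine); // nlu
console.log(routeTurn("My card was charged twice and I'm traveling next week", intents).engine); // llm
```

In this design the threshold, not the matcher, is where per-flow tuning happens: raising it sends more turns to the LLM, lowering it keeps more on the deterministic path.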

What latency should I target?

Aim for under ~400 ms mouth‑to‑ear latency to avoid disrupting the conversation, and lower still for a natural cadence. Optimize model placement, speech engine choice, and routing paths, and use HD/wideband audio where possible.
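One way to reason about this target is to budget each stage between the caller finishing a sentence and hearing the agent's first audio. The stage names and millisecond figures below are illustrative assumptions, not measurements of Voximplant or any vendor; substitute numbers from your own tracing.

```typescript
// One stage of a cascaded STT -> LLM -> TTS turn; values below are illustrative assumptions.
interface Stage {
  name: string;
  ms: number;
}

interface BudgetReport {
  totalMs: number;
  overByMs: number;
  worstStage: string;
}

// Sum the stages and flag the largest one as the first optimization target.
function checkBudget(stages: Stage[], targetMs: number): BudgetReport {
  let totalMs = 0;
  let worst: Stage | undefined;
  for (const s of stages) {
    totalMs += s.ms;
    if (!worst || s.ms > worst.ms) worst = s;
  }
  return { totalMs, overByMs: Math.max(0, totalMs - targetMs), worstStage: worst ? worst.name : "" };
}

const turn: Stage[] = [
  { name: "network in (caller to media server)", ms: 40 },
  { name: "endpointing (detect end of speech)", ms: 100 },
  { name: "STT final transcript", ms: 60 },
  { name: "LLM time to first token", ms: 120 },
  { name: "TTS time to first audio", ms: 50 },
  { name: "network out (media server to caller)", ms: 40 },
];

// Reports totalMs 410, overByMs 10, worstStage "LLM time to first token".
console.log(checkBudget(turn, 400));
```

In this made-up example the turn misses the target by 10 ms, and the LLM's time to first token is the largest single stage, which is why model placement and engine choice are the first levers to pull.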

Can I keep my existing numbers and PBX?

Yes. Look for DID management, SIP trunking/registrar support, and the ability to register to third‑party PBXs so AI agents can join call flows like human agents.